With the delivery of the U.S. Department of Energy’s (DOE’s) first exascale system, Frontier, in 2022, and the upcoming deployment of the Aurora and El Capitan systems by next year, researchers will have the most sophisticated computational tools in the world at their disposal for groundbreaking research.

Exascale machines can perform more than a billion billion (10¹⁸) calculations per second, a figure that helped inspire a day of celebration on October 18, or 10/18.

Exascale Day honors scientists and researchers who use advanced computing to make breakthrough discoveries in medicine, materials sciences, energy, and beyond with the help of the fastest supercomputers in the world. It is also a day to celebrate the impact of high-performance computing at all levels.

Featured Content


Exascale Day 2023: To celebrate those who keep asking what if, why not, and what’s next.

Building a Capable Computing Ecosystem for Exascale and Beyond

The Exascale Computing Project (ECP) has been key to bringing exascale to fruition, opening the door to scientific discoveries beyond our current comprehension.

Source: ECP

Exascale Drives Industry Innovation for a Better Future

Exascale is a massive accelerator for technology, productivity, engineering, and science.

Source: ECP

Oak Ridge: Exascale’s New Frontier Series

A series exploring the applications and software technology driving scientific discoveries in the exascale era.

Source: OLCF

Oak Ridge: Exascale Day 2023

Exascale computing is transforming our ability to solve some of the world’s most difficult and important problems.

Source: OLCF

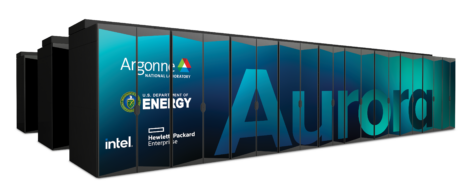

Argonne installs final components of Aurora supercomputer

The ALCF’s exascale machine is one step closer to enabling transformative science.

Source: ALCF

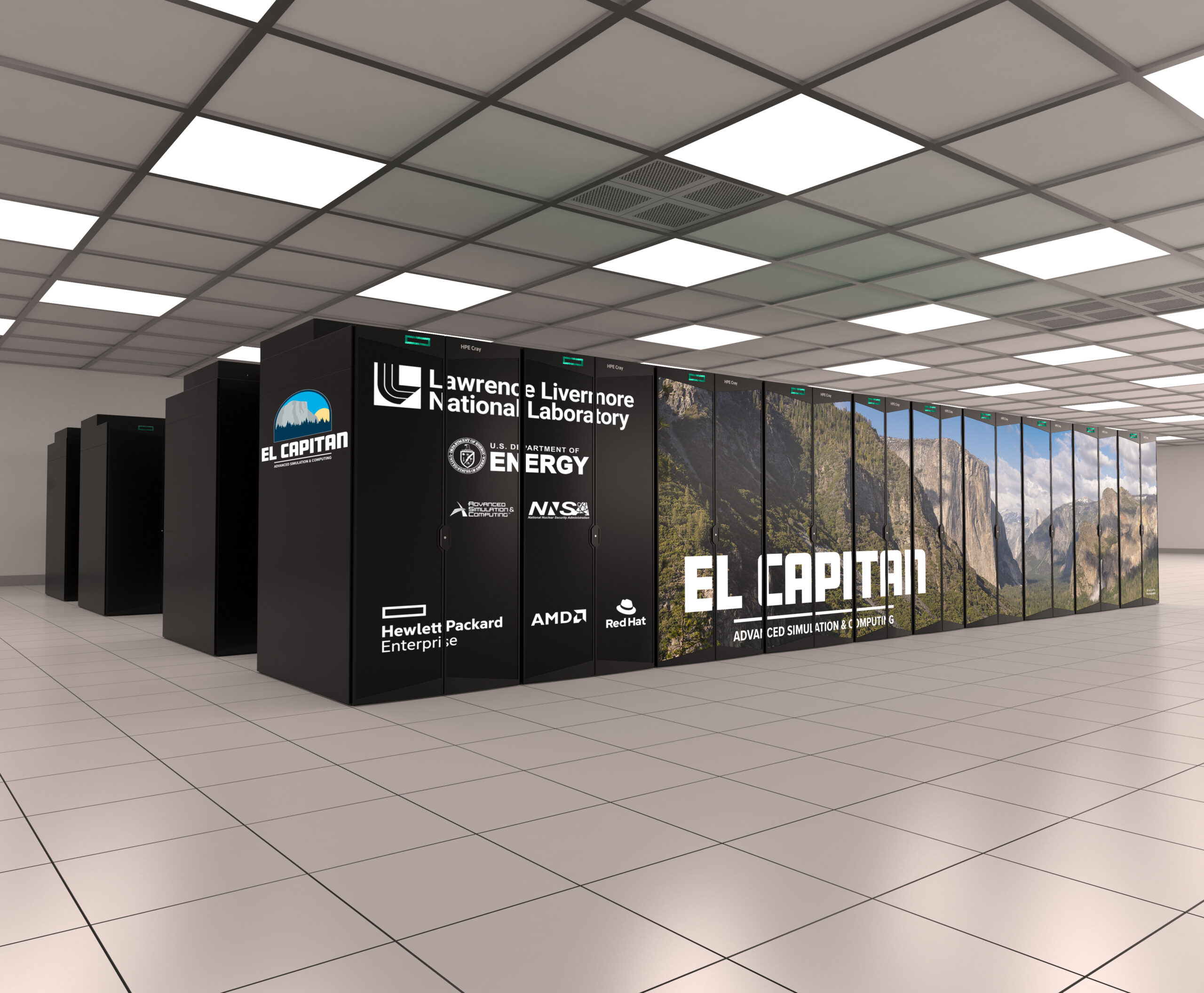

The Road to El Capitan: Preparing for NNSA’s First Exascale Supercomputer (Series)

This is the first article in a five-part series about Livermore Computing’s efforts to stand up the NNSA’s first exascale supercomputer.

Source: LLNL

LLNL scientists eagerly anticipate El Capitan’s potential impact

While Lawrence Livermore National Laboratory eagerly awaits the arrival of its first exascale-class supercomputer, El Capitan, physicists and computer scientists running scientific applications on testbeds for the machine are getting a taste of what to expect.

Source: LLNL

Powering up: LLNL prepares for exascale with massive energy and water upgrade

A supercomputer doesn’t just magically appear, especially one as large and as fast as Lawrence Livermore National Laboratory’s upcoming exascale-class behemoth, El Capitan.

Source: LLNL

– Exascale: Are we ready for the next generation of supercomputers? (Technology Untangled)

– How Argonne’s Sunspot Testbed is Helping Advance Code Development for Aurora (Code Together Podcast)

– How DAOS Enables Large Dataset Workloads on Aurora (Code Together Podcast)

– Siting the El Capitan Exascale Supercomputer at Lawrence Livermore Lab (Let’s Talk Exascale)

– Exascale: The New Frontier of Computing (The Sound of Science)

Mike Bernhardt

Strategic Communications Consultant, Akima Infrastructure Services, LLC (AIS), supporting the Exascale Computing Project

bernhardtme@ornl.gov


Katie Bethea

Oak Ridge Leadership Computing Facility Outreach and Communications Group Leader, Oak Ridge National Laboratory

betheakl@ornl.gov


Justin Breaux

Social Media Manager, Argonne National Laboratory

jbreaux@anl.gov


Beth Cerny

Head of Communications for the Argonne Leadership Computing Facility, Argonne National Laboratory

bcerny@anl.gov


Aaron Grabein

Senior PR Manager, AMD

512-602-8950

Aaron.grabein@amd.com


Bats Jafferji

Communications Manager, Super Compute, Intel

Bats.jafferji@intel.com


Scott Jones

Communications Manager, Computing and Computational Sciences, ORNL

jonesg@ornl.gov


Nick Malaya

Principal Engineer, Exascale Application Performance, AMD

512-981-6660

nicholas.malaya@amd.com


Julie Parente

Head of Communications, Computing, Environment and Life Sciences, Argonne National Laboratory

jparente@anl.gov


Charity Plata

Communications, Computational Science Initiative, Brookhaven National Laboratory

cplata@bnl.gov


Carol Pott

Computing Sciences Area Communications Manager and Communications Liaison to the SciData Division, Lawrence Berkeley National Laboratory

cpott@lbl.gov


Paul Rosien

HPC/AI Customer Evangelism & Community Programs, HPE

888-342-2156


Jeremy Thomas

Public Affairs Office, Lawrence Livermore National Laboratory

925-337-4976

thomas244@llnl.gov


Perspectives

This collection of quotes represents the voices of the HPC community (scientists, researchers, application, software, and system developers, and industry opinion shapers) offering their perspectives on the impact of exascale computing and on the contributions of the Exascale Computing Project, the diverse, collaborative team of more than 1,000 contributors responsible for developing the world’s first capable exascale computing ecosystem.